Generative AI (GenAI) promised a UX revolution, a leap from “if X, then always Y” to a world of endless possibilities. But instead, we’re seeing a surge of flawed designs and wasted research. Why? Because many UX professionals are making critical tactical blunders, mistaking GenAI for a magic bullet instead of a powerful, but nuanced, tool. This isn’t just about avoiding the “AI will steal my job” fearmongering; it’s about confronting the subtle, often counterintuitive, ways GenAI is warping our UX practices.

The illusion of efficiency: Why GenAI could be making you lazy.

Mistake #1: Replacing user empathy with synthetic data.

It’s tempting to think GenAI can replace those messy user interviews, workshops, and feedback sessions. Just feed it some data and poof – instant insights, right? Wrong. While GenAI can analyse large datasets and even generate synthetic user data, it cannot replace the empathy and contextual understanding that come from real human interaction.

The paradox: GenAI can reveal “latent needs” hidden in data patterns, needs even experienced researchers might miss. For instance, GenAI might identify a sudden drop in user engagement correlated with a specific UI element, suggesting a hidden usability issue. However, only in-depth interviews can tell you why: Were users unable to understand the element? Was the expected interaction unclear? Did they find the interaction obtrusive?

How to fix it:

- Use GenAI to augment, not replace: Let it help analyse transcripts, identify patterns, and summarise findings, but always validate those insights with real user research. For example, you can use GenAI tools like Dovetail, MiroAI, ChatGPT or Gemini to help analyse user interview transcripts and find key themes, but you should always check the generated analysis for validity and follow up with qualitative research to understand the nuances behind any emerging themes.

- Focus on qualitative research: GenAI struggles with the nuances of human emotion and behaviour. Prioritise methods like ethnographic studies and in-depth interviews to understand the “why” behind user motivations and the context of user expectations, so that the fix you deliver supports the actions users need to take.

- Don’t neglect quantitative data: GenAI probably can’t see your organisation’s “first party” data, but that data can be a key source of insights about your customers. Collecting and managing actual behavioural data is a real competitive advantage.

The danger of blind faith: GenAI’s hallucinations and the erosion of trust.

Mistake #2: Accepting GenAI outputs without scrutiny.

A critical error in using GenAI lies in blindly trusting its outputs. These tools are susceptible to ‘hallucinations’ and can generate inaccurate or misleading information. Accepting such outputs without scrutiny can lead to design flaws and skewed research findings.

The surprise: User trust in AI isn’t just about accuracy. Recent studies suggest user trust in AI is heavily dependent on transparency, control, and explainability. Without understanding the AI’s reasoning, user confidence plummets.

How to fix it:

- Fact-check everything: Always verify GenAI-generated insights with reliable sources and your own expertise.

  - Is the information consistent with other reliable sources?

  - Can you trace the AI’s reasoning back to its training data?

  - Does the output align with your existing understanding of user behaviour?

- Don’t use just one GenAI tool: Cross-reference GenAI outputs with multiple sources and demand clear explanations of the AI’s decision-making process.

- Understand the limitations: Be aware of the biases that might exist in the training data and how they could influence the outputs.

- Embrace transparency: If using GenAI to generate content or designs, be upfront about it and allow users to verify the information.

- Design for trust: Provide users with insights into the AI’s decision-making process and give them control over the AI’s outputs. For example, allow humans to edit or refine AI-generated content or provide them with the ability to see the sources used by the AI.
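One way to put the cross-referencing advice above into practice is to compare the themes reported by several sources and flag anything that only one of them mentions. The sketch below is a minimal illustration, not a definitive method: the helper name `cross_reference`, the source labels and the sample themes are all hypothetical, and matching themes by exact text (after lowercasing) is a simplifying assumption.

```python
from collections import Counter


def cross_reference(theme_lists, min_sources=2):
    """Split themes into corroborated and needs-review (hypothetical helper)."""
    counts = Counter()
    for themes in theme_lists.values():
        # Use a set so a source repeating a theme is only counted once.
        counts.update({t.strip().lower() for t in themes})
    corroborated = sorted(t for t, n in counts.items() if n >= min_sources)
    needs_review = sorted(t for t, n in counts.items() if n < min_sources)
    return corroborated, needs_review


# Illustrative data only: two GenAI tools plus a researcher's own notes.
outputs = {
    "tool_a": ["Confusing checkout", "Slow search"],
    "tool_b": ["confusing checkout", "Pricing unclear"],
    "researcher_notes": ["Slow search", "Confusing checkout"],
}

corroborated, needs_review = cross_reference(outputs)
print("Corroborated:", corroborated)
print("Check manually:", needs_review)
```

Agreement between sources does not prove a theme is real, and a theme raised by a single source is not necessarily a hallucination. The split only tells you where to spend your verification time first.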

The art of the prompt: GenAI’s Achilles’ heel.

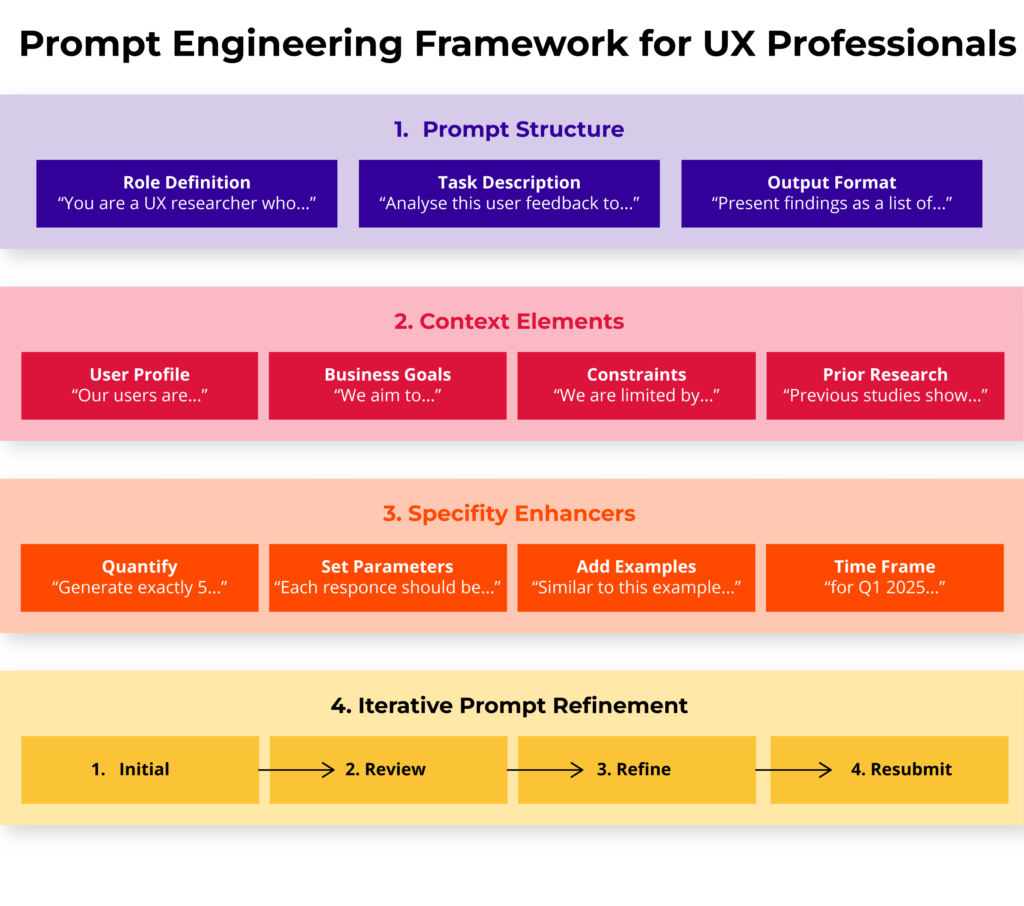

Mistake #3: Neglecting the importance of prompt engineering.

GenAI tools are only as good as the prompts they receive. Vague prompts yield vague results. GenAI is a powerful tool, but it requires precise instructions.

The insight: Prompt engineering is becoming a core UX skill, blurring the lines between researcher and data scientist.

How to fix it:

- Be clear and specific: Use the imperative voice and positive language, and break complex questions down into smaller parts.

- Provide context: Give the model the information it needs to understand your request and generate relevant outputs. For example, if you’re asking the AI to generate design ideas, provide it with information about the target user, the product’s purpose, and any relevant design constraints.

- Iterate and refine: Experiment with different prompts or chain your prompts and analyse the results to find what works best.
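As a minimal sketch of the three tips above, the Python helper below assembles a prompt from an imperative task, explicit context, and a numbered list of smaller questions. The function name `build_prompt`, its parameters and the sample product details are hypothetical, chosen purely for illustration. The resulting text could be pasted into whichever GenAI tool you use.

```python
def build_prompt(task, target_user, product_purpose, constraints, sub_questions):
    """Assemble a specific, context-rich prompt (hypothetical helper)."""
    lines = [
        f"Task: {task}",  # imperative, specific instruction
        "",
        "Context:",
        f"- Target user: {target_user}",
        f"- Product purpose: {product_purpose}",
    ]
    lines += [f"- Constraint: {c}" for c in constraints]
    # Break the request into smaller, numbered parts.
    lines += ["", "Answer each part in turn:"]
    lines += [f"{i}. {q}" for i, q in enumerate(sub_questions, start=1)]
    return "\n".join(lines)


# Illustrative values only.
prompt = build_prompt(
    task="Generate three onboarding screen concepts.",
    target_user="first-time users of a budgeting app",
    product_purpose="help people track monthly spending",
    constraints=["mobile only", "no more than two taps per step"],
    sub_questions=[
        "Describe the layout of each concept.",
        "State which user need each concept addresses.",
    ],
)
print(prompt)
```

Keeping the prompt in a function like this makes iteration easier: you can change one argument at a time and compare the outputs, rather than rewriting free text on every attempt.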

The ethical minefield: GenAI and the future of user trust.

Mistake #4: Overlooking ethical considerations.

GenAI raises critical ethical questions about data privacy, bias, and transparency. Ignoring these can lead to legal nightmares and irreparable brand damage.

The unexpected consequence: The legal and regulatory landscape surrounding GenAI is rapidly evolving, creating new compliance challenges for UX teams.

How to fix it:

- Prioritise data privacy: Ensure you have proper consent to use user data in training models and that the GenAI tool complies with privacy regulations.

- Use responsible AI development practices: Use zero-party or first-party data whenever possible, ensure the training data is fresh, well-labelled, and diverse, and incorporate human oversight in the AI development process.

- Mitigate bias: Be aware of potential biases in the training data and take steps to address them. This might involve using techniques like data augmentation or bias detection tools.

- Be transparent: Communicate how you are using GenAI and address any ethical concerns users might have.

- Test continuously: Regularly test the AI system for bias and accuracy to ensure it is performing as expected.

The strategic void: GenAI without purpose.

Mistake #5: Not aligning GenAI use with business goals.

Adopting GenAI without aligning it with core business goals is a recipe for inefficiency and wasted effort. True success with GenAI tools requires a clear understanding of how they can directly support and enhance established goals. Without this alignment, organisations risk implementing technology that does not deliver tangible value and may even detract from existing workflows.

How to fix it:

- Define clear objectives: Identify specific problems that GenAI can help you solve and set measurable goals. For example, if you’re using GenAI to analyse user research data, your goal might be to reduce the time spent on analysis by 50%.

- Choose the right tools: Select GenAI tools that align with your needs and integrate them into your existing workflows.

- Measure the impact: Track the effectiveness of your GenAI initiatives and adjust as needed. This might involve tracking metrics like time saved, cost reduction, or improvement in user satisfaction.

- Actionable tip: Develop a framework for aligning GenAI initiatives with your organisation’s strategic priorities.
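To make the “reduce analysis time by 50%” example measurable, you need a baseline and a simple calculation. The sketch below shows one way to check progress against such a goal. The function names and the hours used are hypothetical figures for illustration, not real measurements.

```python
def percent_reduction(baseline_hours, current_hours):
    """Percentage of analysis time saved relative to the baseline."""
    if baseline_hours <= 0:
        raise ValueError("baseline_hours must be positive")
    return 100 * (baseline_hours - current_hours) / baseline_hours


def goal_met(baseline_hours, current_hours, target_percent=50.0):
    """True when the saving reaches the target (50% in the example above)."""
    return percent_reduction(baseline_hours, current_hours) >= target_percent


# Hypothetical figures: hours spent analysing one round of interviews.
baseline = 40.0  # before introducing a GenAI tool
current = 18.0   # after introducing it

reduction = percent_reduction(baseline, current)
print(f"Time saved: {reduction:.1f}%")
print("Goal met:", goal_met(baseline, current))
```

Time saved is only one of the metrics mentioned above. The same pattern applies to cost or satisfaction scores, provided you record the baseline before the tool is introduced.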

GenAI done right: Examples of effective use.

When used strategically, GenAI can be a powerful asset for UX professionals. Here are some examples:

- Analysing user research data: GenAI can quickly identify patterns and themes in large datasets, freeing up researchers to focus on deeper analysis. Tools like Thematic and Notably can analyse user feedback from various sources (surveys, interviews, social media) and identify key themes and sentiment.

- Generating design ideas: GenAI can help designers explore a wider range of possibilities and create personalised user interfaces. For example, designers can use tools like Uizard and Galileo AI to quickly generate different design concepts based on user preferences and feedback. These concepts can be tested with real users and iterated upon in close to real time.

- Automating repetitive tasks: GenAI can handle tasks like creating UI documentation or generating code, allowing designers to focus on more creative work. Tools like Relume.io can automatically generate UI documentation from design files, while platforms like GitHub Copilot can aid with code generation.

- Creating user journey maps: GenAI can help visualise user journeys by analysing user data and generating flowcharts or diagrams that illustrate the steps users take to achieve a goal.

- Aligning AI models with real-world user interactions: UX researchers can use early prototypes and user feedback to refine GenAI models and ensure they align with real-world user needs and behaviours. This might involve collecting data on how users interact with AI-powered prototypes and using that data to fine-tune the AI model.

GenAI is not a magic bullet, but it can be a valuable tool when used correctly. It offers significant benefits, such as increased efficiency, enhanced creativity, and the ability to personalise user experiences. However, it’s crucial to be mindful of its limitations, including the potential for bias, the need for human oversight, and the ethical considerations surrounding data privacy and responsible AI development.

How will your team adapt to the challenges of GenAI-powered UX? Are you prepared for the next wave of user expectations?

Remember, the future of UX lies in the synergy between human expertise and AI capabilities. Let’s build that future together.